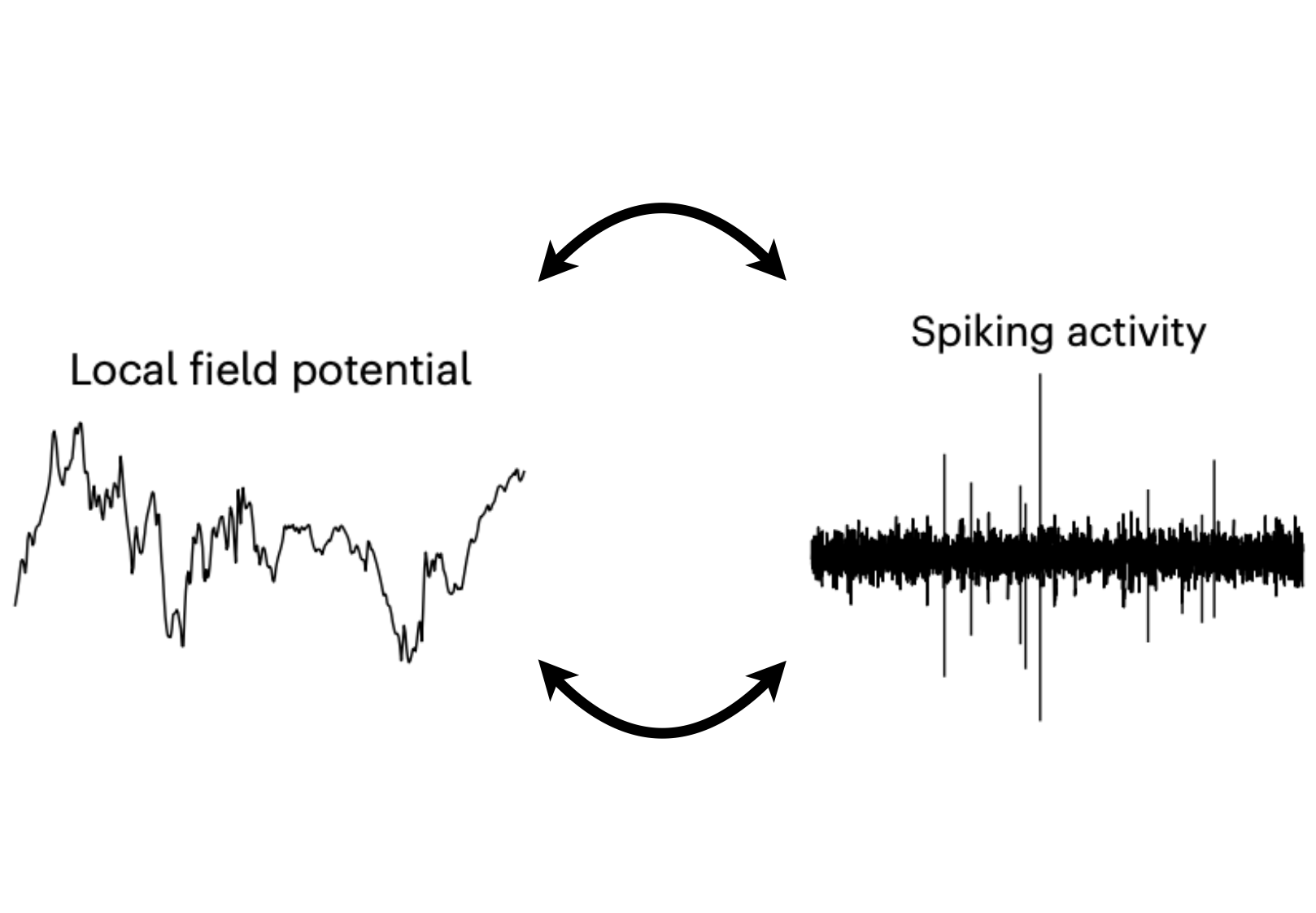

We've learned a lot about the brain by studying the spiking activity of individual neurons in animal brains. However, a different measure of brain activity, the field potential, is more often recorded from the brains of human patients, especially in clinical contexts. To take what we've learned from studying animal brains and apply it to humans, we need to understand how the neural representations we observe in spikes relate to those in field potentials. To address this, I analyzed a dataset with established spiking representations of visual memory to answer a simple question: would the same inferences about the neural representations supporting memory have been made if measures were limited to field potentials? Across many rigorous tests, the answer is a resounding (and exciting) yes when considering the high-gamma activity (50-150 Hz) of the field potential. This work suggests that a type of neural representation called a magnitude code is well-positioned for translation across model species. More generally, these results show that high-gamma activity is a robust, sensitive, efficient, and common neural measurement that allows for reliable translation across both scales of measurement and species.
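As a rough illustration of what "high-gamma activity (50-150 Hz)" means as a measurement, the sketch below estimates the mean power of a signal within a frequency band using a naive discrete Fourier transform. This is not the analysis pipeline used in the project, which is not described here; the function name `band_power`, the sampling rate, and the synthetic test signals mentioned afterwards are all illustrative assumptions.

```python
import cmath
import math


def band_power(signal, fs, f_lo, f_hi):
    """Mean spectral power of `signal` between f_lo and f_hi (Hz).

    Hypothetical helper for illustration only: uses a naive DFT,
    which is slow but needs nothing beyond the standard library.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC offset

    powers = []
    for k in range(n // 2 + 1):
        freq = k * fs / n  # frequency (Hz) of DFT bin k
        if f_lo <= freq <= f_hi:
            coef = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(centered)
            )
            powers.append(abs(coef) ** 2 / n ** 2)

    if not powers:
        raise ValueError("no DFT bins fall inside the requested band")
    return sum(powers) / len(powers)
```

Applied to a synthetic trace sampled at an assumed 1000 Hz, `band_power(trace, 1000, 50, 150)` would be larger for a trace containing a 90 Hz component than for one containing only a 10 Hz component. In practice, high-gamma amplitude is more commonly extracted with a band-pass filter followed by an envelope estimate; the DFT version is used here only to keep the sketch self-contained.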

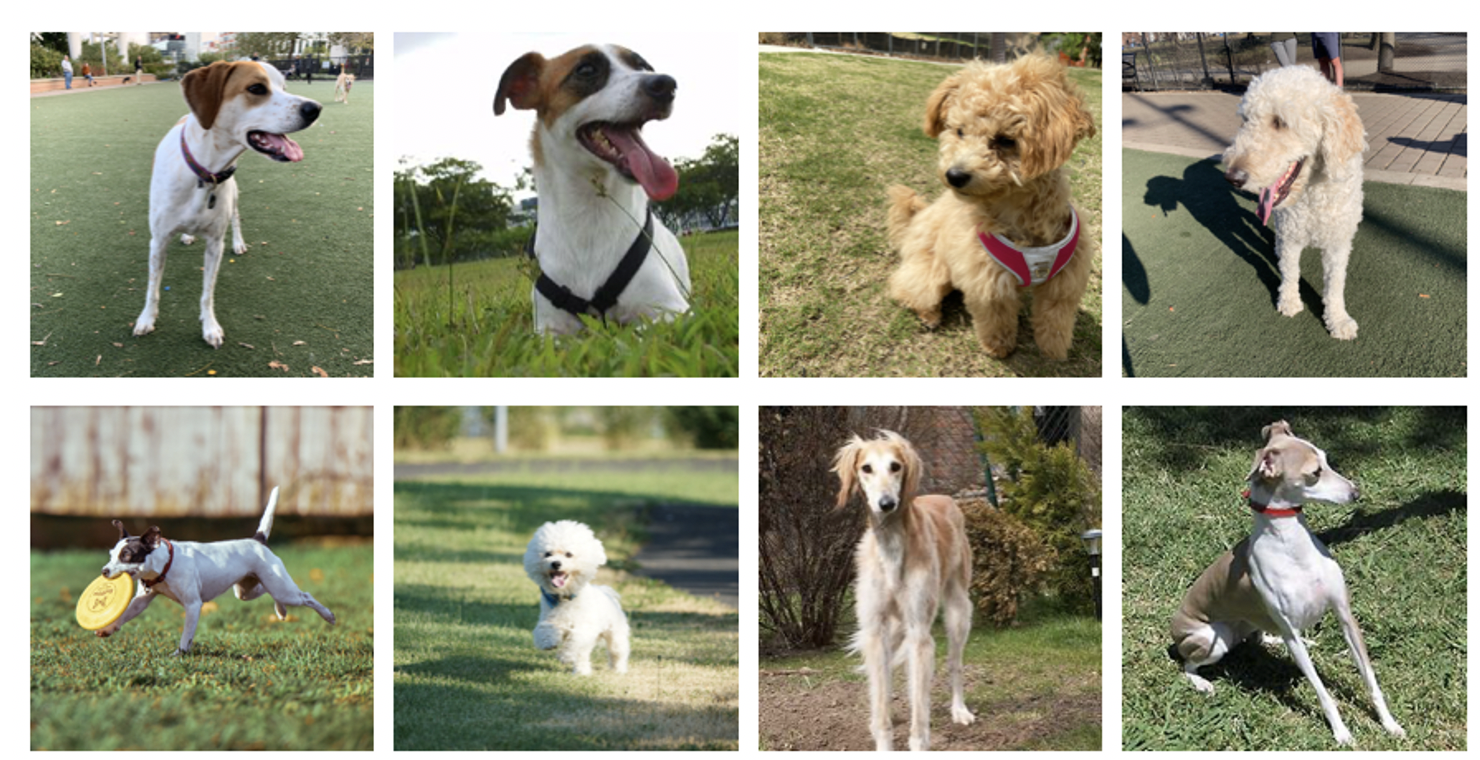

Humans are really good at remembering what they see, but the same image can become more or less memorable depending on what else you've recently seen. For example, we're much less likely to remember a particular dog when we've just seen 10 other dogs than when we see that same dog after seeing 10 other objects. This type of flexible processing is something that makes our brains more than just a static computer that always produces the same output for a given input. This project asks a simple question: What changes about the neural representation of a given image such that it's better remembered in one context than in another? Answering this question is essential for understanding how visual memory operates in naturalistic contexts where the set of objects we're looking at is constantly changing. Our investigations into this question are ongoing.

Juvenile zebra finches are often studied as a model of motor learning because of their remarkable ability to learn highly complex and stereotyped songs from a tutor. Such learning requires the integration of information in cortico-basal ganglia loops carrying motor signals with information in evaluative loops that assess and refine the bird’s song. Cortical area AId is a compelling site for such integration, as the motor and evaluative loops converge there in juveniles via a collateral that disappears in adulthood, after the song has been learned. To test whether altering activity in AId can prevent accurate copying of tutor song, I helped develop a paradigm in which the singing of a specific syllable is paired with optogenetic disruption of AId in juveniles. Work to determine the impact of these disruptions is ongoing.

Most tests of face recognition implicitly assume it to be an undifferentiated ability. However, several independent components could comprise face recognition proficiency, such as those for the perceptual discrimination of faces, face memory, and the ability to generalize across viewpoints. I designed a behavioral task to separate two of these potential components: proficiency for the perceptual discrimination of faces and proficiency for face memory. Using this task, I found evidence at the group level that these are two independent processes. My analyses also suggest that these two proficiencies may be uncorrelated at the individual level, challenging the generally held assumption that face recognition is a general ability that cannot be differentiated into independent components.

Watch a demo of the task, check out the poster, or read the preprint!

Previous studies have demonstrated that recognizing an unfamiliar face rotated in depth is difficult, but have not offered explanations as to why. We quantified the difference in representation imposed by rotating a face using the Gabor jet model, and compared this to the representational difference between two test faces. The Gabor jet model has previously been shown to correlate strongly with perceptual discrimination of similar faces. We found that two factors were sufficient to account for the difficulty of any given trial: 1) the orientation disparity between the matching and sample images and 2) the similarity between the match and distractor images. With modest rotations of just 13° or 20°, we documented a previously unappreciated, large difference in representation imposed by rotating a face in depth, one that could account for the difficulty of this task.
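To give a feel for the kind of measure involved, here is a heavily simplified sketch of a Gabor-jet-style dissimilarity: each image is described by the magnitudes of Gabor filter responses at a grid of positions, and two images are compared by the Euclidean distance between those feature vectors. This is not the implementation used in the study; the grid spacing, wavelengths, number of orientations, and all function names are assumptions chosen to keep the example small.

```python
import math


def gabor_magnitude(image, cx, cy, wavelength, theta, sigma):
    """Magnitude of a complex Gabor filter response centered at (cx, cy)."""
    real = imag = 0.0
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            dx, dy = x - cx, y - cy
            # Gaussian envelope times a sinusoidal carrier at angle theta
            envelope = math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
            phase = 2 * math.pi * (dx * math.cos(theta) + dy * math.sin(theta)) / wavelength
            real += pixel * envelope * math.cos(phase)
            imag += pixel * envelope * math.sin(phase)
    return math.hypot(real, imag)


def jet_vector(image, grid_step=4, wavelengths=(4.0, 8.0), n_orient=4):
    """Concatenate Gabor magnitudes over a grid of positions, scales, and orientations."""
    height, width = len(image), len(image[0])
    features = []
    for cy in range(grid_step // 2, height, grid_step):
        for cx in range(grid_step // 2, width, grid_step):
            for wavelength in wavelengths:
                for k in range(n_orient):
                    theta = k * math.pi / n_orient
                    features.append(
                        gabor_magnitude(image, cx, cy, wavelength, theta, sigma=wavelength / 2)
                    )
    return features


def dissimilarity(img_a, img_b):
    """Euclidean distance between the jet vectors of two same-sized grayscale images."""
    a, b = jet_vector(img_a), jet_vector(img_b)
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))
```

Under these assumptions, the two factors described above would correspond to `dissimilarity(sample, match)` for the cost of the rotation and `dissimilarity(match, distractor)` for how alike the two test faces are, where `sample`, `match`, and `distractor` are hypothetical grayscale images given as lists of pixel rows.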

Watch a demo of the task, check out the poster, or read the paper!

In 2002, Graham Hole showed that we have a remarkable capacity to recognize familiar faces that have been stretched vertically or horizontally by a factor of 2. Since this discovery, no explanation has been given for this invariance. One possibility is that familiarity with a face facilitates invariance to stretch, much as familiarity has been shown to facilitate invariance to rotation in depth. To test this, I designed a behavioral task in which subjects rated their familiarity with each of the celebrities used in the task, allowing me to determine whether familiarity with a face could explain invariance to stretch. My results show that familiarity cannot account for stretched face recognition. After rejecting the possibility that faces are “un-stretched” by warping them to an average face, we suggest that the percept of an elongated face provides a signal for the shrink-wrapping of receptive fields to conform to an attended object, a phenomenon witnessed in single unit activity in the macaque by Moran and Desimone (1985). Such a phenomenon may serve, more generally, as the underlying neural mechanism for object-based attention.

Watch a demo of the task, check out the poster, or read the preprint!

When channel surfing or walking about on campus, a familiar face elicits a feeling of quick, facile recognition, aided by the predictability of who we might encounter at that time and venue. Although the subjective impression is one of immediate and automatic recognition independent of context, to what extent is this true? To address this question, I designed an experiment with taxing temporal limitations (presentation times of 133-168 msec/face) and maximum uncertainty (the identity of the target was not known). Neurotypical subjects averaged about 75% overall accuracy in detecting the presence of a celebrity. Moreover, accurate detection (indicating that a celebrity was present in the sequence) was nearly always accompanied by identification of the target. Such successful detection and identification under demanding conditions indicates a remarkable facility of face recognition for neurotypical individuals. Prosopagnosics, those who struggle with face recognition, were significantly worse, with some performing at chance. They too, however, were almost always able to identify any celebrity that they detected, demonstrating that when we sense that a face is familiar, we almost always know who it is (although we sometimes block on the name).

Watch a demo of the task, check out the poster, or read the paper!